WELCOME TO THE AUGMENTED COGNITION LAB (ACLAB)

At the Augmented Cognition Lab, we’re rethinking how machines perceive and understand the world, not by scaling up data, but by learning more from less.

Our mission is to build intelligent systems that can reason, predict, and interact meaningfully with the real world, even when data is sparse (i.e., in small-data domains).

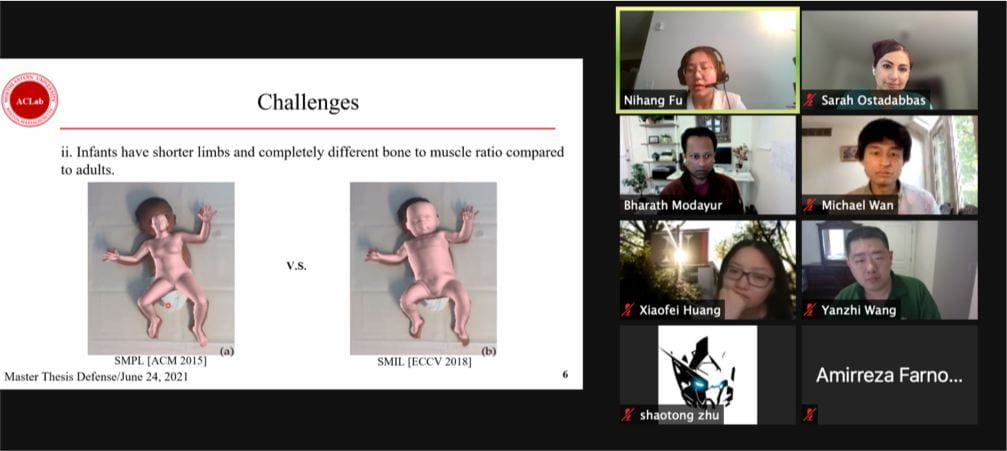

We specialize in video understanding with a focus on motion, because motion captures causality, intent, and dynamics that static frames simply can’t. Whether we’re studying infant behavior, animal interactions, robot navigation, or defense scenarios, our tools are built to work in environments where labeled data is limited or hard to get.

Rather than asking “how much data can we collect?”, we ask “how much structure can we extract from the data we already have?” By combining physics-inspired priors, generative models, and interpretable motion representations (like pose, trajectories, and temporal patterns), we bridge perception with cognition.

Our vision is to augment human understanding—not replace it—by designing machine learning systems that are:

- Data-efficient in small or sensitive domains

- Interpretable through structured representations

- Actionable in real-world scenarios

We’re excited by challenges where conventional deep learning hits its limits, and we see them as opportunities to innovate, collaborate, and push the boundaries of what AI can do with less.

ACLab Funded Projects

Research in ACLab is generously supported by the National Science Foundation, Department of Defense, Oracle, Sony, MathWorks, Verizon, Amazon Cloud Services, Biogen, and NVIDIA. For the full list of research projects, please visit: https://web.northeastern.edu/ostadabbas/research/

Our AR/VR technologies were featured in NU Engineering magazine.

Our trip to Vermont to observe the total solar eclipse, April 8, 2024.